Now is the time to defend the final haven for privacy: your brain

One of the principal concerns of privacy is to prevent others – typically governments or companies – from monitoring what we think. They have to do that indirectly, by spying on what we say or write, and inferring what is going through our minds from that data. We assume that our actual thoughts are immune from surveillance. That is true today, but may not be tomorrow. Work is underway in research and corporate laboratories to come up with ways of reading directly what we are thinking. The question is: what happens to privacy once that is possible?

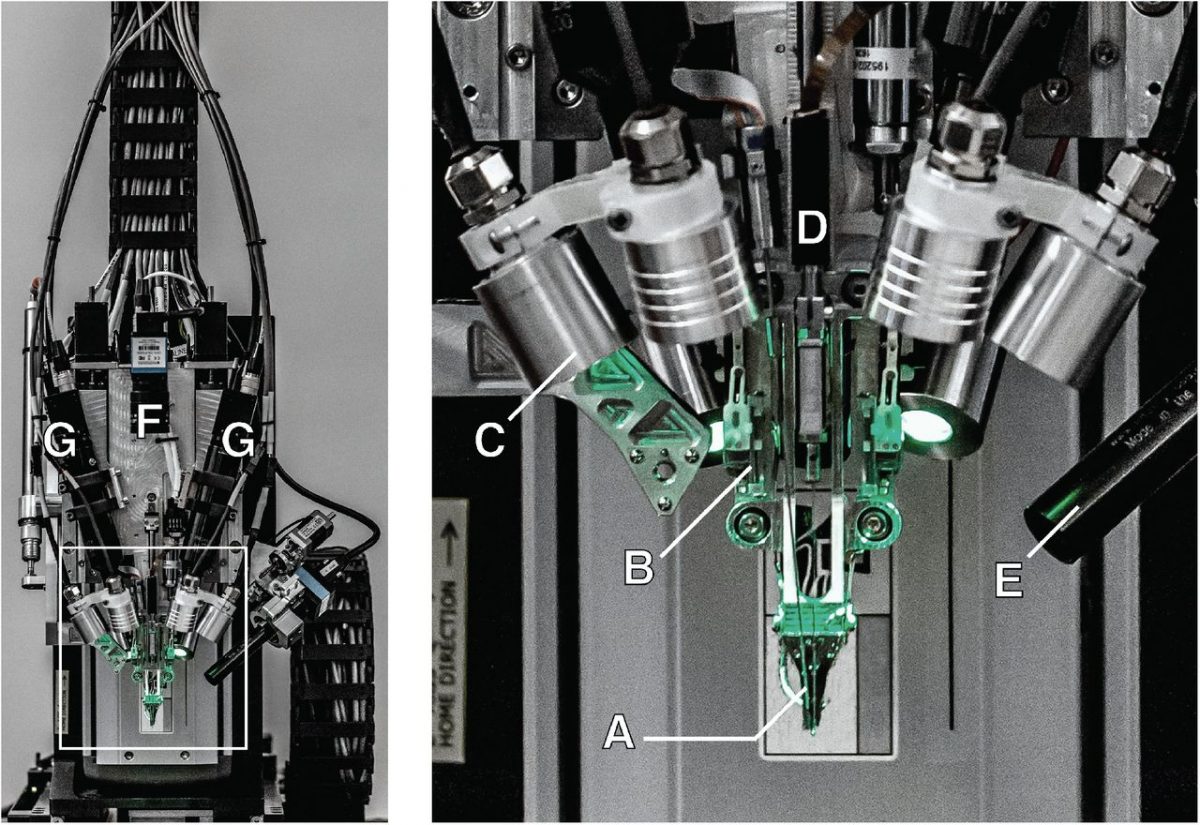

As a foretaste of what is coming, Elon Musk recently unveiled a brain-machine interface based on tiny threads that are inserted directly into the brain. An academic paper by Musk, published on the preprint server bioRxiv, explained how that was achieved:

> We have also built a neurosurgical robot capable of inserting six threads (192 electrodes) per minute. Each thread can be individually inserted into the brain with micron precision for avoidance of surface vasculature and targeting specific brain regions.

That’s clearly an invasive approach. So far, it has only been used on rats and monkeys. One of its intended uses is to help people suffering from paralysis regain lost abilities. Before it can be used on humans, permission will be required from the relevant authorities for further experiments and testing, so practical application of this approach is still quite a way off. Less invasive, and less ambitious, is a new Facebook project to develop a brain-computer interface:

> We are working on a system that will let people type with their brains. Specifically, we have a goal of creating a silent speech system capable of typing 100 words per minute straight from your brain – that’s five times faster than you can type on a smartphone today.

Facebook insists that privacy will not be at risk, because the aims of the project are strictly limited. It says it is about “decoding those words you’ve already decided to share by sending them to the speech center of your brain”, rather than reading your general neural activity. However, once brain-computer interface technology starts to advance, it is inevitable that researchers will want to go further by trying to eavesdrop on all of our thoughts and feelings. One reason that’s likely is that there are already companies keen to understand our subconscious response to advertising – a new field known as neuro-marketing. Here’s the theory behind it:

> the brain expends only 2 percent of its energy on conscious activity, with the rest devoted largely to unconscious processing. Thus, neuromarketers believe, traditional market research methods – like consumer surveys and focus groups – are inherently inaccurate because the participants can never articulate the unconscious impressions that whet their appetites for certain products.

Given these financial incentives to push this kind of technology to the point where it can listen not just to our conscious thoughts but to our unconscious impressions as well, it is important to start considering the implications for privacy. Work has already begun in this area. For example, in 2017, a paper was published with the title “Towards new human rights in the age of neuroscience and neurotechnology”.

> This paper assesses the implications of emerging neurotechnology applications in the context of the human rights framework and suggests that existing human rights may not be sufficient to respond to these emerging issues. After analysing the relationship between neuroscience and human rights, we identify four new rights that may become of great relevance in the coming decades: the right to cognitive liberty, the right to mental privacy, the right to mental integrity, and the right to psychological continuity.

The section on the right to mental privacy is extensive, and raises many issues that will become increasingly important as neurotechnology advances. For example, it notes that privacy protection typically applies to the information we record and share. However, as neurotechnology advances, protection is also needed for the neural activity that underpins that information. Similarly, unconscious neural activity would need protection as well as the conscious elements. One suggestion in the paper is that perhaps we need a right to limit how much of our brain’s activity is accessed, to give us control over what is shared from within our heads to the outside world.

The paper also discusses whether such a right to mental privacy would be absolute or relative. That is, whether it must be preserved under all circumstances, or whether there may be extreme situations – for example involving serious crimes or terrorist attacks – where an individual could be compelled by courts or the state to undergo brain-level interrogations. Forcibly reading someone’s mind using neurotechnology would clearly represent a major step beyond what is possible or permissible today. A related issue is the legal status of such neural-level interrogation:

> whether the mere record of thoughts and memories – without any coerced oral testimony or declaration – is evidence that can be legally compelled, or whether this practice necessarily requires the ‘will of the suspect’ and therefore constitutes a breach of the privilege against forced self-incrimination.

If mental privacy is not absolute, it is easy to imagine calls for mental states to be accessed and used as evidence in trials of serious offenses. It would be the ultimate “if you have nothing to hide, you have nothing to fear” argument applied at the cellular level. That is, if you are innocent, you won’t have any neural states that would indicate your guilt. The trouble is, we still know so little about how the brain works that such a statement would be completely unjustified based on our present understanding. Nonetheless, the time to consider whether this approach should be allowed is now, when we can still define and protect neural privacy, not years down the road when we have already lost it.

Featured image by Neuralink.