Poland Opens GDPR Investigation into ChatGPT and OpenAI amid Mounting Privacy Concerns

Poland’s Personal Data Protection Office is investigating a GDPR complaint against ChatGPT and the company behind it, OpenAI. According to the Polish data protection agency:

> the complainant turned to the Polish supervisory authority after his requests relating to the exercise of his rights under GDPR were not completed by OpenAI. As it turns out, ChatGPT generated inaccurate information about the complainant in response to his request. In turn, the request for its rectification was not fulfilled by OpenAI, despite the fact that every controller is obliged to process accurate data. It was also not possible for the complainant to find out what data ChatGPT was processing about him at all.

It’s not surprising that ChatGPT has been accused of breaching the EU’s main privacy law: the PIA blog noted back in February that ChatGPT was a privacy disaster waiting to happen. As the first complaint to be taken up by an EU data protection agency, this case will be watched closely, both by other EU Member States and around the world. The Polish inquiry is likely to investigate many of the key GDPR issues that arise for AI programs, and to serve as a benchmark in future legal cases.

The Polish Personal Data Protection Office’s investigation is the first formal inquiry, but it is not the first time an EU data protection agency has expressed concerns about ChatGPT. Italy’s data protection agency, Il Garante per la protezione dei dati personali, blocked access to ChatGPT in the country precisely because of concerns that it violated the GDPR. A few weeks later, the Italian authority allowed OpenAI to offer ChatGPT once more, after the company implemented a number of changes designed to address those privacy concerns. However, that swift approval meant that the core GDPR issues were not explored in full detail. The new Polish investigation now has a chance to do exactly that.

Italy was not the only EU country to begin exploring whether ChatGPT and OpenAI complied with the GDPR. The Spanish Data Protection Agency began preliminary investigatory proceedings against OpenAI for possible non-compliance with the regulations. Although no more information has been released, it is significant that one month later, the authorities of the Ibero-American Network for the Protection of Personal Data, a group of 16 data protection authorities from 12 countries, mostly from Latin America, began their own joint investigation of the following aspects of ChatGPT (translation via DeepL):

> the legal bases for such processing, the information provided to the user on the processing, the exercise of the rights recognised in the data protection regulations, the possible transfer of personal data to third parties without the consent of the owners, the lack of age control measures to prevent minors from accessing its technology, and not knowing whether it has adequate security measures for the protection and confidentiality of the personal data collected.

In France, the data protection agency CNIL issued what it calls an AI “action plan” based on four main strands:

- understanding the functioning of AI systems and their impact on people;

- enabling and guiding the development of privacy-friendly AI;

- federating and supporting innovative players in the AI ecosystem in France and Europe;

- auditing and controlling AI systems, and protecting people.

The same month, the UK Competition and Markets Authority announced a more general “initial review of competition and consumer protection considerations in the development and use of AI foundation models.”

In North America, Canada began an investigation into OpenAI in response to a complaint alleging “the collection, use and disclosure of personal information without consent.” The US has lagged somewhat behind this backdrop of increasing regulatory scrutiny around the world. However, following a complaint from the Center for AI and Digital Policy, an AI think tank, the Federal Trade Commission opened what the Washington Post calls an “expansive” investigation into OpenAI in July, “probing whether the maker of the popular ChatGPT bot has run afoul of consumer protection laws by putting personal reputations and data at risk.”

Although the investigations mentioned above already amount to something of an onslaught against OpenAI and ChatGPT, they are likely to be just the start. For example, the European Data Protection Board, the body overseeing the application of the GDPR, set up a dedicated ChatGPT task force in April to foster cooperation between EU data protection agencies and to exchange information on possible enforcement actions against the AI company.

Moreover, beyond the GDPR, there is the new EU AI Act, which is specifically designed to regulate the use of AI and to protect the public from possible harm. As we wrote in June, the AI Act includes many important privacy protections, as well as measures specifically designed to apply to the latest generation of AI companies (as the European Parliament’s press release on the new AI Act makes clear).

It would be an understatement to say that the world of AI is fast-moving. It’s impressive that, perhaps for the first time, data protection authorities around the world are moving almost as rapidly. As a result, we can expect to see more investigations into the new generation of AI systems, and probably some major fines too, not least in the EU.

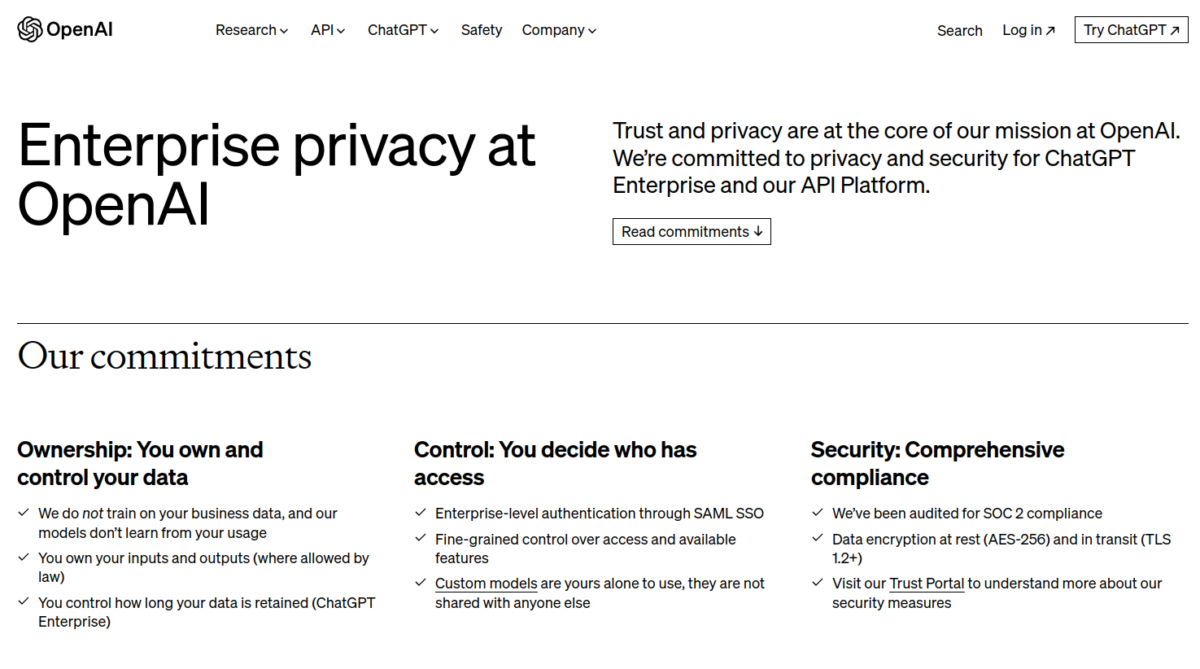

Featured image by OpenAI.