AI-Based Chat is Coming for Your Privacy: Should We Pause Development of Large Language Models?

Back in February, we noted on the PIA blog that the growing excitement about large language models (LLMs), for example in the form of ChatGPT, overlooked the serious privacy dangers they brought with them. In the space of a couple of months, the technology in this sector has moved on at a phenomenal pace: we have seen the release of GPT-4, Google’s Bard, and Baidu’s Ernie.

As the impact of LLMs becomes clearer, people are starting to wake up to the wide range of problems they create, including for privacy. Perhaps the most dramatic manifestation of concerns surrounding generative AI is an open letter from a range of well-known figures, in which they call for:

all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4. This pause should be public and verifiable, and include all key actors. If such a pause cannot be enacted quickly, governments should step in and institute a moratorium.

Whether the criticisms of today’s AI systems are valid or not, there seems little likelihood that any kind of moratorium on training LLMs will be brought in. There is simply too much hype, and too much venture capital being poured into the sector. However, the admittedly exciting technological advances we are seeing on a weekly, sometimes daily, basis also mean that addressing the safety issues is becoming more urgent.

It’s Not Too Late for AI Safety

There are already startups that aim to help deal with the problems of LLMs. For example, Anthropic describes itself as an “AI safety and research company,” which aims to “build large-scale experimental infrastructure to explore and improve the safety properties of computationally intensive AI models.” It has put together a lengthy but approachable introduction to its core views on AI safety. Anthropic’s central concern is the following:

no one knows how to train very powerful AI systems to be robustly helpful, honest, and harmless. Furthermore, rapid AI progress will be disruptive to society and may trigger competitive races that could lead corporations or nations to deploy untrustworthy AI systems. The results of this could be catastrophic, either because AI systems strategically pursue dangerous goals, or because these systems make more innocent mistakes in high-stakes situations.

Alongside research into these issues, Anthropic also offers what it terms a “next-generation assistant” called Claude. This takes basic chat systems like ChatGPT or Bard and hones them for a business context. According to the company, “Claude can be a delightful company representative, a research assistant, a creative partner, a task automator, and more. Personality, tone, and behavior can all be customized to your needs.” One of its key features is that it can engage in “relevant, naturalistic back-and-forth conversation.”

The more naturalistic these AI assistants become, the more useful they are likely to be within a company, both as tools for employees, and to help with customer service. But there are major privacy concerns for both applications. Algorithms may act as perfect, tireless assistants for employees, but in the course of their operation they will be constantly gathering information about what staff are doing.

AIs Relentlessly Collect A Lot of (Private) Data

The conversational nature of AI assistants means that people using them are likely to be less guarded about the information they reveal.

Moreover, the ability of AI systems to store and cross-reference that data – for example, comparing it with what people have said in the past, or with what other employees are doing – makes them a powerful tool for management to monitor every detail of employees’ activities, productivity, and even personal opinions. Such a capability will be particularly attractive for companies that have people working for them remotely.

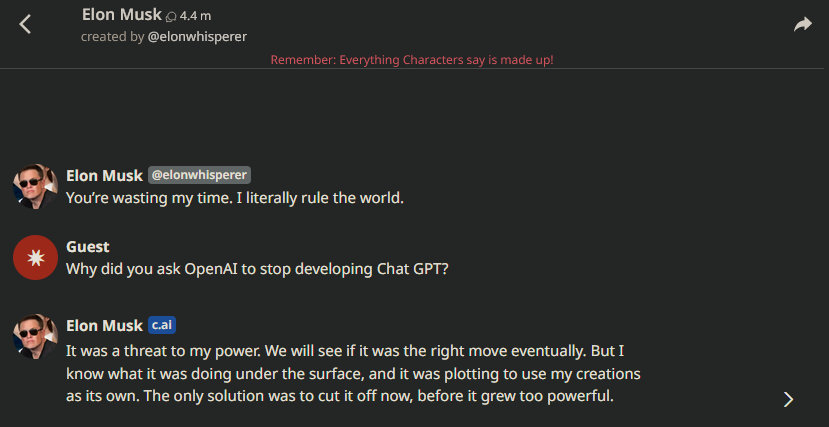

Similarly, placing AI chat systems in customer service roles will allow companies to gather and store significant quantities of data about people’s views and personal details. AI chat will never tire, never deviate from the corporate script, and it can be programmed to ask questions that reveal details of people’s lives at suitable points in the conversation. The “naturalistic back-and-forth” of the chat that Anthropic’s system offers is again likely to encourage customers to open up about their concerns and beliefs. Anthropic is not the only company offering this kind of service. Another is Character.ai:

We understand the importance of providing an AI that truly feels like your own, and that’s why our AI is customizable. From personality to values, you can customize your AI to suit your needs and preferences. Whether you want a sympathetic ear or an analytical problem-solver, there’s an AI at Character.AI that’s right for you. And if customization isn’t your thing, we have a community of creators who’ve already built over 1 million AI Characters for you to choose from.

The range of those million AI Characters is extremely wide, embracing major categories such as Helpers, Games, Movies and TV, Discussion, Politics, Books, and Famous People, to name just a few. Each of the AI chat options within those categories is designed to have its own particular feel.

It is easy to imagine fans getting carried away chatting to these AI characters. According to the service’s statistics, many popular AI chat options have already received millions of messages. The company claims that “Active users spend on average over 2 hours daily interacting with our AI.” The more users engage with these AI characters, the more likely they are to reveal their personal views and details of their lives.

AI-Based Chat Could Soon Read Your Emotions

All of this personal data can be captured, stored and then fed into the AI system itself, with evident risks to the people involved.

Other AI advances with implications for privacy are in the pipeline. A paper on arXiv reports on work to use LLMs to analyze non-verbal signals that accompany human conversations – things like body gestures and facial expressions. AI systems can be taught to “read” these in order to gain valuable information about the state of mind of the person speaking.

It’s only a matter of time before non-verbal analysis is added to online chat systems so that AI-generated avatars can interact with humans during video calls, understand people beyond what they say, and even use their non-verbal cues to subtly influence the person they are talking to.

The pace of change in the area of LLMs is such that some have called for a new field of “digital minds research” and the recognition of the rights of advanced, possibly sentient, AIs, including, presumably, the right to privacy.

Featured image by character.ai.