What happens to privacy once AIs start hacking systems – and people?

Artificial Intelligence (AI) has mostly figured in this blog because of its ability to sift through information – for example, finding patterns in data, or matching faces. But one of the reasons that AI is such a powerful and important technology is that it is completely general: it can be applied to almost anything. As a new paper by the well-known security expert Bruce Schneier explores, one area where AI will have a major impact is hacking, in all its forms. The paper is extremely wide-ranging and well worth reading in its entirety (there’s also a good summary by Schneier himself), but this post will concentrate on the ways in which AI hacking is likely to impact privacy and data protection. Schneier writes:

One area that seems particularly fruitful for AI systems is vulnerability finding. Going through software code line by line is exactly the sort of tedious problem at which AIs excel, if they can only be taught how to recognize a vulnerability. Many domain-specific challenges will need to be addressed, of course, but there is a healthy amount of academic literature on the topic – and research is continuing. There’s every reason to expect AI systems will improve over time, and some reason to expect them to eventually become very good at it.

If that happens, it will have a huge and direct impact on data protection. Over the last few years, we have already seen massive leaks of personal data caused by people breaking into supposedly secure systems by exploiting flaws in the code. Once AIs can spot vulnerabilities in code and online systems, the threat to privacy will increase greatly. That’s because AI systems can continuously scan the entire Internet, seeking tell-tale signs of vulnerabilities that even security experts might miss. Once a new vulnerability is found in a piece of code, it can be exploited by AIs instantly on a massive scale, giving administrators little time to patch, even assuming that they become aware of the problem.

Schneier points out that there is, however, an upside to this coming development: the same AI capabilities could be used to find vulnerabilities in code and online systems before they are made available to the public:

Imagine how a software company might deploy a vulnerability finding AI on its own code. It could identify, and then patch, all – or, at least, all of the automatically discoverable – vulnerabilities in its products before releasing them. This feature might happen automatically as part of the development process.

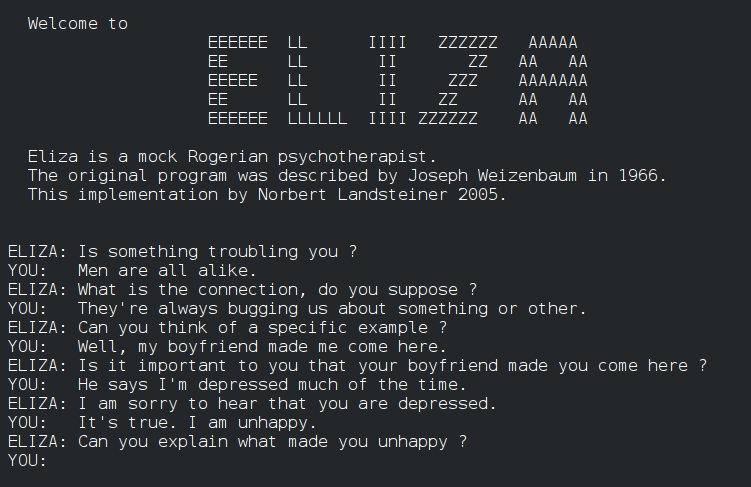

Unfortunately, there is a second kind of AI hack that is not only more pernicious for privacy, but also much harder to protect against. As Schneier reminds us, people have a tendency to ascribe human-like qualities to programs, for reasons that have to do with how humans have evolved. As far back as 1966, Joseph Weizenbaum created a primitive text-based conversational program called ELIZA that mimicked a therapist. Despite the simplicity of its logic, and the crudeness of its output, people were quite happy confiding deeply personal secrets to what they were told was simply a program. Research from 2000 confirmed that a person was likely to share private information with a program if the latter shared an obviously fictional piece of “personal information”. It is not hard to see how today’s more advanced AI systems will be able to extract personal information from people online by posing as real humans:

One example of how this will unfold is in “persona bots.” These are AIs posing as individuals on social media and in other digital groups. They have histories, personalities, and communications styles. They don’t constantly spew propaganda. They hang out in various interest groups: gardening, knitting, model railroading, whatever. They act as normal members of those communities, posting and commenting and discussing. Systems like GPT-3 [which uses deep learning to produce human-like text] will make it easy for those AIs to mine previous conversations and related Internet content and appear knowledgeable.

Schneier points out that such persona bots might occasionally post something about a political, economic or social issue. Once the AI systems have convincingly established themselves as “people”, their views will be accorded at least some respect. Schneier warns: “One persona bot can’t move public opinion, but what if there were thousands of them? Millions?” Persona bots might similarly use their acquired credibility to ask the occasional question about deeply personal issues. Not everyone will answer, but enough might do so that extremely revealing private data is gathered into a database holding potentially compromising facts about millions of people. Or even billions: once AI systems are good enough to fool most people, most of the time – and remember that ELIZA already did something like this more than 50 years ago – they can be deployed globally on a huge scale.

Whereas direct AI hacking systems can be used to test and harden the defenses of code and systems before they are deployed, there is no comparable way that AI systems can be used to stop people from falling for persona bots and revealing intimate information about themselves. Education and warnings about the risk of being duped will only go so far. The problem is that humans have evolved over millions of years as social beings, which means trusting other humans in order to enhance the chance of survival by cooperating in a hostile world. The real threat from AI hacking is that programs will turn what is a key feature of our humanity into a bug that can be exploited and used against us by whoever is controlling the AI.

Featured image from Wikimedia Commons.