AI-based lie detection system will screen travellers to EU for ‘biomarkers of deceit’

As the borders between nations have become increasingly sensitive from a political point of view, so the threats to privacy there have grown. Privacy News Online has already reported on the use of AI-based facial recognition systems as a way of tightening border controls. As software improves, and hardware becomes faster and cheaper, it’s likely that this will become standard. But it’s by no means the only application of AI systems at the border.

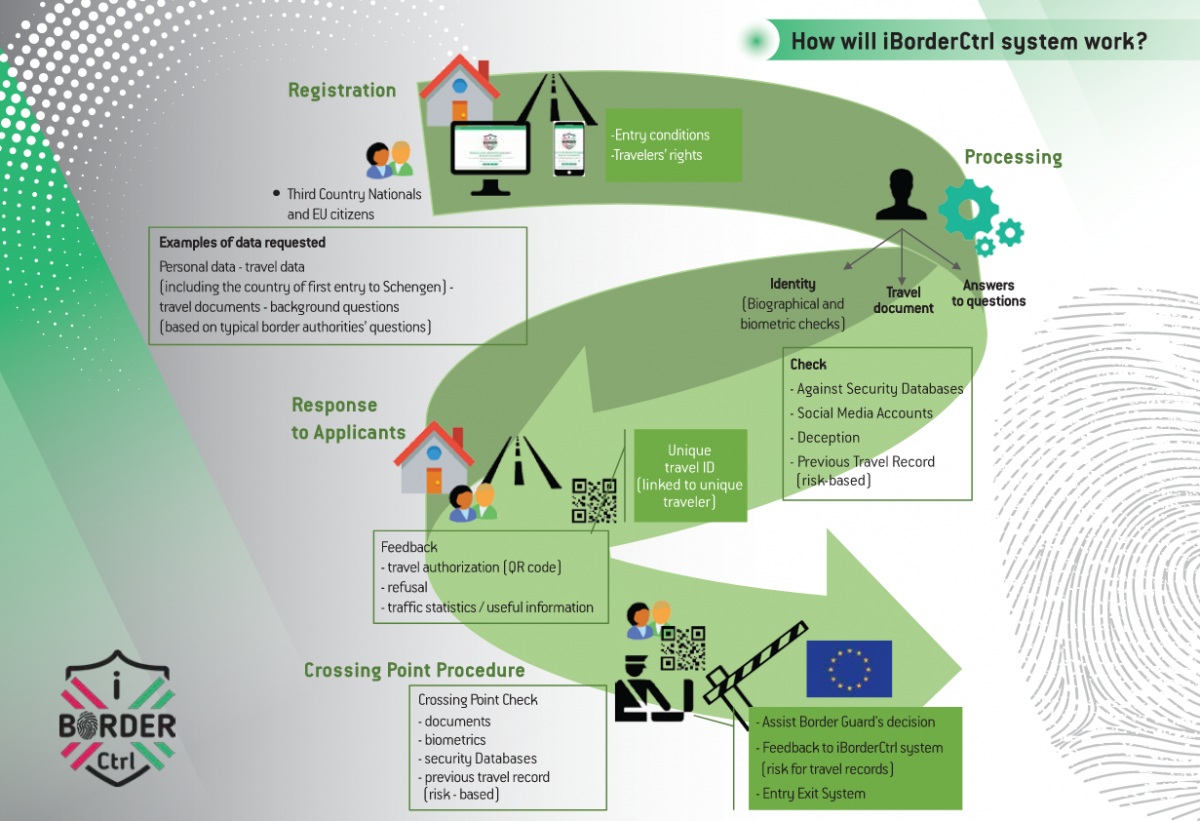

The European Commission has just announced trials of iBorderCtrl in Hungary, Greece and Latvia. The $5.2 million project includes an AI-based lie detection system designed to spot when visitors to the EU give false information about themselves and their reasons for entering the area:

> iBorderCtrl provides a unified solution with aim to speed up the border crossing at the EU external borders and at the same time enhance the security and confidence regarding border control checks by bringing together many state of the art technologies (hardware and software) ranging from biometric verification, automated deception detection, document authentication and risk assessment. iBorderCtrl designs and implements a comprehensive system that adopts mobility concepts and consists of a two-stage-procedure, designed to reduce cost and time spent per traveller at the land border crossing points: road, walkway, train stations.

There are a number of components to iBorderCtrl, including a biometrics module, a face matching tool, a document authenticity analytics tool and a system that cross-checks a traveller’s information from social media or legacy systems. But the most innovative aspect is the “automatic deception detection system”, which is used in the first of a two-stage procedure.

At the pre-registration step, travellers log on to an online system, accessed from their homes, that gathers the information needed to establish whether they should be permitted to cross the border. As part of that step, they are “interviewed” by a virtual border agent. This requires a camera on a laptop or smartphone so that the system can record and analyze the applicant’s responses. At the heart of the AI analysis is the use of “micro-expressions” (MEs):

> MEs are very brief, subtle, and involuntary facial expressions which normally occur when a person either deliberately or unconsciously conceals his or her genuine emotions. Compared to ordinary facial expressions or macro-expressions, MEs usually last for a very short duration which is between 1/25 to 1/5 of a second.
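To put that duration range in perspective, the short Python sketch below converts it into the number of video frames a micro-expression would occupy. The frame rates used (15, 30 and 60 frames per second) are illustrative assumptions about typical laptop and smartphone cameras, not figures published by iBorderCtrl, and the function name is hypothetical.

```python
from fractions import Fraction

# Duration range for micro-expressions quoted above, in seconds.
ME_MIN_S = Fraction(1, 25)
ME_MAX_S = Fraction(1, 5)


def frames_spanned(duration_s: Fraction, fps: int) -> Fraction:
    """Hypothetical helper: how many video frames an event of this duration covers."""
    return duration_s * fps


# Assumed camera frame rates (illustrative only, not iBorderCtrl specifications).
for fps in (15, 30, 60):
    shortest = frames_spanned(ME_MIN_S, fps)
    longest = frames_spanned(ME_MAX_S, fps)
    print(f"{fps} fps: {float(shortest):.1f} to {float(longest):.1f} frames")
```

At an assumed 30 frames per second, for example, the shortest micro-expressions in the quoted range span just 1.2 frames and the longest about six, so any system relying on them has very little visual evidence to work with per expression.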

The theory is that an AI system can be trained using machine learning to spot which micro-expressions indicate that a person is lying. The iBorderCtrl group, whose work builds on the earlier “Silent Talker” system, calls these “biomarkers of deceit”. Risk analysis based on the pre-registration information – including micro-expressions – is then used to decide who should be subject to stricter controls at the border itself:

> Deception detection is a novel approach for border control deployment applications, which if considered as self-deployed through the pre-registration phase approach, it may be able to evaluate travellers, who cannot be evaluated deterministically by other methods, effectively. Thus it may prove to be a key enabler in identifying subjects that border guards should pay special attention to during the actual crossing, and an indicator of those aspects of their travel that are suspicious.

Although the idea of pre-screening travellers is clearly attractive, since it allows border agents to concentrate on potentially high-risk individuals, the approach has been criticized by some experts as “pseudoscience”, as the Guardian reported:

> Bruno Verschuere, a senior lecturer in forensic psychology at the University of Amsterdam, told the Dutch newspaper De Volkskrant he believed the system would deliver unfair outcomes.
>
> “Non-verbal signals, such as micro-expressions, really do not say anything about whether someone is lying or not,” he said. “This is the embodiment of everything that can go wrong with lie detection. There is no scientific foundation for the methods that are going to be used now.”

In addition, a member of the European Parliament, Sophie in ’t Veld, has submitted a number of written questions to the European Commission about the trials, including: “Has the High-Level Expert Group on Artificial Intelligence given recommendations regarding ethics guidelines for this system and on its impact on the application of the [EU Charter of Fundamental Rights]? If not, why not?”

Despite those doubts, other groups around the world are also developing AI-based systems to help border guards spot high-risk behavior. For example, members of the BORDERS consortium at the University of Arizona are working on a project they call AVATAR (Automated Virtual Agent for Truth Assessments in Real-Time):

> There are many circumstances, particularly in a border-crossing scenario, when credibility must be accurately assessed. At the same time, since people deceive for a variety of reasons, benign and nefarious, detecting deception and determining potential risk are extremely difficult. Using artificial intelligence and non-invasive sensor technologies, BORDERS has developed a screening system called the Automated Virtual Agent for Truth Assessments in Real-Time (AVATAR). The AVATAR is designed to flag suspicious or anomalous behavior that warrants further investigation by a trained human agent in the field. This screening technology may be useful at Land Ports of Entry, airports, detention centers, visa processing, asylum requests, and personnel screening.

It’s easy to see why this approach is gaining favor. Controlling borders is a hot political issue, and anything that claims to make it easier and safer will naturally attract the interest of the authorities. But there is a danger here that people will be mesmerized by the use of AI, as if it were guaranteed to produce better outcomes than human judgement. Even though the pilot schemes discussed above use AI-based lie detection systems as an adjunct to border agents, it is easy to imagine increasing weight being given to the automated risk analysis. Doing so is the path of least resistance, and undoubtedly makes the challenge of dealing with the growing influx of travellers more manageable.

But as with facial recognition systems, it is important to understand that AI-based analysis is not perfect, and can produce incorrect evaluations. Moreover, the black-box nature of machine learning systems means that it is currently hard to scrutinize such decisions. That is not only bad for transparency; it also makes it almost impossible for people to appeal against automated judgements.

A further danger is that as AI-based virtual border guards are deployed more widely, it may be considered acceptable to allow them to probe more deeply into personal matters in order to improve the reliability of their decision-making. It may be argued that, since they are “only” AI systems, privacy is not compromised in the same way as it would be if people were interrogated by human border agents. After all, this was precisely the argument Google used when it introduced advertising into Gmail: that it was “only” software that was reading personal messages.

In retrospect, that turned out badly for privacy, since it normalized this kind of invisible surveillance, which has since spread to most major online services, with serious adverse effects. As more advanced AI systems are deployed in a similar manner, it is important not to repeat that mistake, and to ensure that respect for privacy is built in from the start.

Featured image by iBorderCtrl.